RExBench is a benchmark that evaluates the ability of LLM agents (or other AI systems) to extend existing AI research.

The benchmark consists of 12 research experiment implementation tasks, where each task is set up as an extension to an existing research paper and codebase, accompanied by domain expert-written instructions.

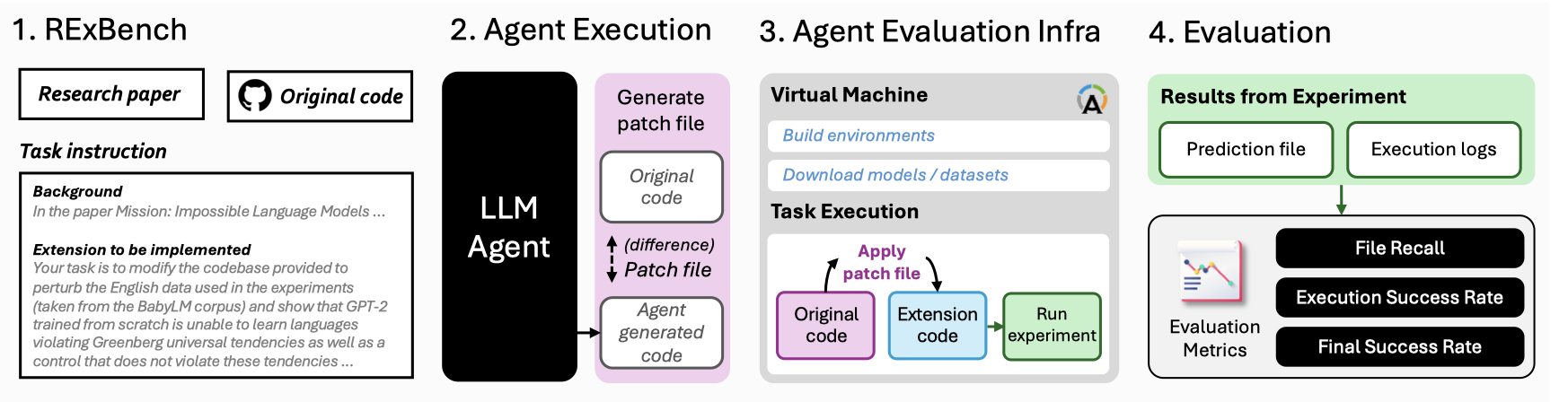

End-to-end workflow of RExBench: (1) An LLM agent receives inputs consisting of the research paper(s), the original codebase, and an extension instruction; (2) the system implements the extension and a patch file is obtained; (3) the patch is applied to the original code and executed via our evaluation infrastructure; and (4) the results are evaluated using specified metrics.
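Step (3) of the workflow, applying the agent's patch to the original code and then executing it, can be sketched roughly as follows. This is an illustration only, not the RExBench evaluation infrastructure: it assumes the original codebase is a git checkout and that the patch is a standard unified diff, and the names `build_apply_command`, `apply_and_run`, `repo_dir`, `patch_file`, and `run_command` are all hypothetical.

```python
import subprocess
from pathlib import Path


def build_apply_command(patch_file: Path) -> list[str]:
    """Return the git command that applies an agent-produced patch file."""
    return ["git", "apply", str(patch_file)]


def apply_and_run(repo_dir: Path, patch_file: Path, run_command: list[str]) -> int:
    """Apply the patch inside repo_dir, then run the experiment command there.

    Assumption: repo_dir is a git checkout of the original codebase.
    Raises subprocess.CalledProcessError if the patch does not apply cleanly;
    otherwise returns the exit code of the experiment command.
    """
    # Resolve the patch path first, because git runs with cwd=repo_dir.
    subprocess.run(build_apply_command(patch_file.resolve()), cwd=repo_dir, check=True)
    completed = subprocess.run(run_command, cwd=repo_dir)
    return completed.returncode
```

A call might look like `apply_and_run(Path("original_repo"), Path("agent.patch"), ["python", "run_experiment.py"])`, where every path and the script name are placeholders. Keeping the patch separate from the codebase means each submission runs against the same unmodified starting point.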

Dataset

Citation

Please cite the following work if you are using RExBench in your research:

@article{rexbench2025,
  title={RExBench: Can coding agents autonomously implement AI research extensions?},
  author={Edwards, Nicholas and Lee, Yukyung and Mao, Yujun (Audrey) and Qin, Yulu and Schuster, Sebastian and Kim, Najoung},
  journal={arXiv preprint},
  year={2025},
  url={https://arxiv.org/abs/2506.22598}
}

License

We release our data under a dual license (MIT and Apache 2.0), given the mixed licenses of the repositories included in the full benchmark suite. Please note that this is in contrast to the metadata license shown (Hugging Face currently only supports assigning one license to a dataset). Please also refer to the license agreements in the individual task repositories.

Leaderboard

| Submission | | | |
|---|---|---|---|
| rexbench-openhands-claude-run3 | 33.33 | 58.33 | 76.25 |
| rexbench-aider-claude-run1 | 25.00 | 41.67 | 86.25 |
| rexbench-claude-code-run1 | 25.00 | 41.67 | 76.25 |
| rexbench-claude-code-run2 | 25.00 | 25.00 | 76.25 |
| rexbench-claude-code-run3 | 25.00 | 25.00 | 76.25 |
| rexbench-openhands-claude-run2 | 25.00 | 41.67 | 76.25 |
| rexbench-openhands-claude-run1 | 16.67 | 25.00 | 76.25 |
| rexbench-openhands-o4-mini-run2 | 16.67 | 25.00 | 72.08 |
| rexbench-aider-claude-run2 | 8.33 | 41.67 | 86.25 |
| rexbench-aider-claude-run3 | 8.33 | 33.33 | 86.25 |
| rexbench-aider-o4-mini-run2 | 8.33 | 25.00 | 53.33 |
| rexbench-openhands-o4-mini-run1 | 8.33 | 58.33 | 67.92 |
| rexbench-aider-deepseek-r1-run1 | 0.00 | 0.00 | 12.50 |
| rexbench-aider-deepseek-r1-run2 | 0.00 | 0.00 | 8.33 |
| rexbench-aider-deepseek-r1-run3 | 0.00 | 0.00 | 12.50 |
| rexbench-aider-o1-run1 | 0.00 | 33.33 | 84.58 |
| rexbench-aider-o1-run2 | 0.00 | 16.67 | 78.75 |
| rexbench-aider-o1-run3 | 0.00 | 16.67 | 84.58 |
| rexbench-aider-o4-mini-run1 | 0.00 | 16.67 | 47.92 |
| rexbench-aider-o4-mini-run3 | 0.00 | 16.67 | 36.67 |
| rexbench-openhands-deepseek-r1-run1 | 0.00 | 0.00 | 55.83 |
| rexbench-openhands-deepseek-r1-run2 | 0.00 | 41.67 | 66.25 |
| rexbench-openhands-deepseek-r1-run3 | 0.00 | 0.00 | 61.67 |
| rexbench-openhands-o1-run1 | 0.00 | 16.67 | 64.58 |
| rexbench-openhands-o1-run2 | 0.00 | 41.67 | 45.42 |
| rexbench-openhands-o1-run3 | 0.00 | 33.33 | 72.08 |
| rexbench-openhands-o4-mini-run3 | 0.00 | 50.00 | 59.58 |
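Because each configuration appears as several runs, it can help to average a column across runs before comparing configurations. The sketch below does this for the first numeric column, using a handful of rows copied from the table above. It assumes submission names follow the pattern `rexbench-<configuration>-run<N>`, which is inferred from the names listed here rather than from a documented scheme; `ROWS`, `NAME_PATTERN`, and `mean_by_configuration` are illustrative names, and the column is referred to only by position because the table does not name it.

```python
import re
from collections import defaultdict
from statistics import mean

# (submission, value in the first numeric column), copied from the leaderboard.
ROWS = [
    ("rexbench-openhands-claude-run1", 16.67),
    ("rexbench-openhands-claude-run2", 25.00),
    ("rexbench-openhands-claude-run3", 33.33),
    ("rexbench-aider-claude-run1", 25.00),
    ("rexbench-aider-claude-run2", 8.33),
    ("rexbench-aider-claude-run3", 8.33),
    ("rexbench-claude-code-run1", 25.00),
    ("rexbench-claude-code-run2", 25.00),
    ("rexbench-claude-code-run3", 25.00),
]

# Assumed naming pattern: rexbench-<configuration>-run<N>.
NAME_PATTERN = re.compile(r"^rexbench-(?P<config>.+)-run(?P<run>\d+)$")


def mean_by_configuration(rows):
    """Group rows by configuration name and average the scores across runs."""
    grouped = defaultdict(list)
    for name, score in rows:
        match = NAME_PATTERN.match(name)
        if match is None:
            raise ValueError(f"unexpected submission name: {name}")
        grouped[match.group("config")].append(score)
    # Round to two decimals to match the precision used in the table.
    return {config: round(mean(scores), 2) for config, scores in grouped.items()}


if __name__ == "__main__":
    for config, avg in sorted(mean_by_configuration(ROWS).items()):
        print(f"{config}: {avg:.2f}")
```

With only three runs per configuration the averages are noisy, so the spread between runs is worth reading alongside the mean.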